Google Search Advocate John Mueller says core updates rely on longer-term patterns rather than recent site changes or link spam attacks.

The comment was made during a public discussion on Bluesky, where SEO professionals debated whether a recent wave of spammy backlinks could impact rankings during a core update.

Mueller’s comment offers timely clarification as Google rolls out its June core update.

Asked directly whether recent link spam would be factored into core update evaluations, Mueller said:

“Off-hand, I can’t think of how these links would play a role with the core updates. It’s possible there’s some interaction that I’m not aware of, but it seems really unlikely to me.

“Also, core updates generally build on longer-term data, so something really recent wouldn’t play a role.”

For those concerned about negative SEO tactics, Mueller’s statement suggests recent spam links are unlikely to affect how Google evaluates a site during a core update.

The conversation began with SEO consultant Martin McGarry, who shared traffic data suggesting spam attacks were impacting sites targeting high-value keywords.

In a post linking to a recent SEJ article, McGarry wrote:

“This is traffic up in a high value keyword and the blue line is spammers attacking it… as you can see traffic disappears as clear as day.”

Mark Williams-Cook responded by referencing earlier commentary from a Google representative at the SEOFOMO event, where it was suggested that in most cases, links were not the root cause of visibility loss, even when the timing seemed suspicious.

This aligns with a broader theme in recent SEO discussions: it’s often difficult to prove that link-based attacks are directly responsible for ranking drops, especially during major algorithm updates.

As the discussion turned to mitigation strategies, Mueller reminded the community that Google’s disavow tool remains available, though it’s not always necessary:

“You can also use the domain: directive in the disavow file to cover a whole TLD, if you’re +/- certain that there are no good links for your site there.”
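As a rough illustration of the file format Mueller refers to, the Python sketch below builds disavow-file text from a list of domains and URLs. It assumes Google's documented conventions (one entry per line, `#` for comments, `domain:` to cover a whole domain). Based only on Mueller's remark, it also assumes a bare TLD such as `xyz` can follow `domain:`. The function name and every domain and URL are hypothetical, and any real file should be reviewed by hand before upload.

```python
def build_disavow_file(domains, urls=(), comment=None):
    """Build disavow-file text: optional comment, 'domain:' lines, then full URLs."""
    lines = []
    if comment:
        # Lines starting with '#' are treated as comments in a disavow file.
        lines.append(f"# {comment}")
    # Normalize entries (trim, lowercase, drop a leading dot) and remove duplicates.
    normalized = (d.strip().lower().lstrip(".") for d in domains)
    for entry in sorted({d for d in normalized if d}):
        # A bare TLD such as 'xyz' follows Mueller's TLD-wide suggestion (an assumption).
        lines.append(f"domain:{entry}")
    # Individual URLs are listed as-is, one per line.
    lines.extend(urls)
    return "\n".join(lines) + "\n"
```

Calling `build_disavow_file([".XYZ", "spam-example.com"], urls=["https://bad.example.net/page.html"])` would emit `domain:spam-example.com`, `domain:xyz` and the URL, each on its own line.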

He added that the tool is often misunderstood or overused:

“It’s a tool that does what it says; almost nobody needs it, but if you think your case is exceptional, feel free.

“Pushing it as a service to everyone says a bit about the SEO though.”

That final remark drew pushback from McGarry, who clarified that he doesn’t sell cleanup services and only uses the disavow tool in carefully reviewed edge cases.

Alan Bleiweiss joined the conversation by calling for Google to share more data about how many domains are already ignored algorithmically:

“That would be the best way to put site owners at ease, I think. There’s a psychology to all this cat & mouse wording without backing it up with data.”

His comment reflects a broader sentiment. Many professionals still feel in the dark about how Google handles potentially manipulative or low-quality links at scale.

Mueller’s comments offer guidance for anyone evaluating ranking changes during a core update:

If your site has seen changes in visibility since the start of the June core update, these insights suggest looking beyond recent link activity. Instead, focus on broader, long-term signals, such as content quality, site structure, and overall trust.

Too many marketing teams get trapped in endless feature comparisons when choosing core technology. They scrutinize capabilities lists, technical specifications and product demos without asking a more fundamental question: What will this help us accomplish?

Feature obsession leads to buyer’s remorse. Marketing teams invest in technically impressive platforms that fail to deliver practical value. Implementation drags, adoption struggles and ROI remains elusive. Why? Because ticking boxes doesn’t transform marketing operations.

Innovative marketing leaders flip the script. They define success first and then evaluate tools against those outcomes. This connects technology decisions to marketing and business priorities. It replaces abstract feature comparisons with concrete performance expectations across four critical dimensions: business impact, marketing operations, customer experience and technology.

This framework helps marketing teams cut through vendor hype and make confident technology decisions that deliver genuine transformation.

When selecting digital experience platforms (DXPs), marketing teams must look beyond feature sets to evaluate whether a solution will genuinely deliver results across four key dimensions.

Technology investments ultimately answer to business objectives. Three requirements deserve priority:

Market acceleration: Competition punishes delay. Marketing teams need tools that dramatically shrink the time between concept and deployment. Will this tool cut weeks from your production cycles? The best systems eliminate bottlenecks through intuitive interfaces, modular components and streamlined approval workflows that get experiences to market faster.

Operational adaptability: Market conditions shift constantly, so rigid systems quickly become liabilities. Evaluate whether a platform lets you reshape experiences without extensive development work. Can you adjust to unexpected opportunities or competitive moves without massive resource commitment? Adaptability at the content, design, and integration levels translates directly to business advantage.

Measurable value: Marketing technology must prove its worth. Set clear expectations for how investments will generate returns across different timeframes. Look for platforms with built-in measurement capabilities connecting marketing activities to business outcomes. This connection gives marketing leaders the evidence to show how technology investments drive revenue growth, reduce costs and mitigate risks.

Business impact requirements matter most because they connect technology choices to enterprise priorities. The right platform turns marketing into a more agile, responsive and accountable business function.

Dig deeper: How to choose the right marketing AI tools for real business impact

Business impact answers why your team needs new technology. Marketing operations (MOps) requirements address how your team will work differently with it. Look for these transformative capabilities:

Component-based flexibility: Monolithic systems can’t keep pace with today’s marketing demands. Teams need the freedom to assemble and reassemble digital experiences from modular pieces. Could you reconfigure experiences without dependencies that slow you down? The best systems offer robust component libraries and flexible templates that let marketers respond immediately to emerging opportunities.

Practical personalization that scales: Your customers want experiences that feel made just for them. The problem is that most marketing teams hit a wall when trying to go beyond basic segments. Does the system make sophisticated personalization doable with your existing team? You need tools that allow marketers to build audience segments, craft conditional content, run tests and refine experiences without calling in the tech cavalry every time.

The right platform turns personalization from “nice idea, too complicated” into “we do this daily.” It fits your workflows rather than forcing you to reorganize around the technology.

Reduced technical dependencies: Nothing kills marketing momentum faster than technical bottlenecks. When routine changes require developer assistance, opportunities vanish while tickets languish in the queue. Evaluate whether a platform gives marketers appropriate independence while maintaining governance controls. Can they create pages, modify layouts, configure personalization and analyze results without technical help? The right tools let marketing teams focus on strategy and execution rather than technical coordination.

Rising customer expectations leave no room for subpar experiences. Three requirements guide smart platform selection:

Cross-channel coherence: Customers interact with brands across multiple touchpoints and expect seamless experiences. Evaluate whether a platform can maintain consistency across websites, mobile applications, commerce storefronts and emerging digital channels. Can you centrally manage experience elements while adapting appropriately to channel-specific requirements? The right systems provide component libraries and workflow management that ensure brand consistency without sacrificing channel optimization.

Speed and responsiveness: Slow, clunky experiences drive customers away. Performance directly impacts satisfaction, conversion rates and search visibility. Assess platforms across three dimensions:

Look for architecture-level performance optimization through edge delivery networks, server-side rendering and adaptive loading patterns. The best platforms treat performance as a core characteristic, not an afterthought.

Efficient market adaptation: Most marketing teams operate across multiple markets with distinct language, cultural and regulatory requirements. Evaluate whether a platform supports efficient localization that balances global brand standards with local relevance.

Does it provide translation workflows, content reuse mechanisms and market-specific customization capabilities? The right system reduces the operational complexity of managing global marketing operations.

Dig deeper: How to boost operational maturity with strategic martech selection

While marketing leaders might delegate technical evaluation to IT colleagues, understanding key architectural requirements helps ensure platforms serve immediate and long-term needs. These factors deserve attention:

Service-based architecture: Monolithic platforms constrain both marketing and technical flexibility. Evaluate whether a platform uses composable architecture with well-defined services accessible through standardized APIs.

True composability lets marketing teams start with essential capabilities and expand incrementally, avoiding risky all-at-once implementations.

Ecosystem connectivity: No marketing tool stands alone. Evaluate how well a platform connects with your broader marketing and enterprise technology landscape.

Robust integration options reduce implementation time and maintenance overhead while maximizing the value of existing technology investments.

Good luck hiring technical talent these days. When you do find great developers, the last thing you want is to waste their time on clunky platforms.

Ask tough questions about developer tools. Does the platform play nice with Git? Can your team automate testing instead of manually clicking through everything? Will deployment be a one-click affair or a prayer session?

Great platforms have real documentation (not just marketing fluff disguised as technical content), useful development kits, and a local setup that matches what’s in production. When your developers aren’t fighting the platform, they build faster, break less stuff and stick around longer. That’s something marketing and IT can both celebrate.

Dig deeper: Big players vs. niche specialists: Choosing your martech vendors

No platform excels equally across all requirements. Marketing teams must prioritize based on their business context, operational challenges and technical environment. Consider this:

Strategic priority mapping: Connect requirements directly to strategic initiatives. If improving customer acquisition efficiency tops your priority list, personalization capabilities and marketing autonomy may deserve greater weight than localization support or architectural composability.

Pain point identification: Current operational headaches often signal where transformation will deliver the most significant impact. If your team struggles with development bottlenecks, independence and agility requirements may matter more than integration options or performance optimization.

Technical reality check: Your organization’s technical capabilities influence which requirements deserve attention. Teams with limited development resources prioritize marketing independence and pre-built integrations, while those with sophisticated engineering talent emphasize composability and developer experience.

By balancing these perspectives, you can develop a weighted requirement model that reflects your unique context and guides technology selection.
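A weighted requirement model can be as simple as a weighted average. The sketch below is a minimal illustration, not a prescribed method. The weights, the 1-5 scoring scale and the platform names are all hypothetical placeholders for your own priorities.

```python
# Hypothetical weights for the four dimensions; they must sum to 1.0.
WEIGHTS = {
    "business": 0.35,
    "marketing": 0.30,
    "customer_experience": 0.20,
    "technology": 0.15,
}

# Hypothetical platforms, scored 1-5 per dimension by the evaluation team.
PLATFORMS = {
    "Platform A": {"business": 4, "marketing": 5, "customer_experience": 3, "technology": 2},
    "Platform B": {"business": 3, "marketing": 3, "customer_experience": 4, "technology": 5},
}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Combine per-dimension scores into a single weighted score."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[dim] * scores[dim] for dim in weights)


def rank_platforms(
    platforms: dict[str, dict[str, float]], weights: dict[str, float]
) -> list[tuple[str, float]]:
    """Return (platform, score) pairs sorted from highest to lowest score."""
    ranked = [(name, weighted_score(s, weights)) for name, s in platforms.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)
```

With these weights, Platform A scores 3.8 and Platform B 3.5. Shifting enough weight toward technology (for example, 0.40 for technology and 0.20 for each other dimension) would reverse that order. That is why the weights should come from your own strategic priorities and pain points rather than from vendor demos.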

Even the perfect platform on paper can fail without proper implementation planning. I think you’ll agree that these factors deserve special attention:

Change management strategy: New tools inevitably disrupt established workflows and organizational structures. Evaluate technical capabilities and vendor support for change management, including implementation methodology, training resources and partnership approach. The best vendors recognize that technology adoption requires organizational adaptation.

Incremental value delivery: Big-bang implementations rarely succeed. Assess whether platforms support gradual adoption that delivers quick wins while building toward comprehensive transformation. Effective implementations follow a progression from simple use cases to more sophisticated capabilities, creating momentum through visible success.

Clear success metrics: Define specific KPIs across all four dimensions — business, marketing, customer experience and technology. Establish measurement approaches that connect platform capabilities to organizational outcomes. These metrics help validate investment decisions and guide ongoing optimization.

Core martech investments shape your capabilities for years. Beyond current requirements, evaluate how platforms will support continuous evolution as priorities shift, customer expectations evolve and technologies advance. Consider these forward-looking factors:

By selecting core martech tools through this outcome-driven framework, marketing teams position themselves for immediate success and long-term evolution. This approach ensures technology investments enable marketing transformation rather than creating expensive digital paperweights.

Dig deeper: Beyond quadrants: An alternative approach to martech selection

Contributing authors are invited to create content for MarTech and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. MarTech is owned by Semrush. Contributor was not asked to make any direct or indirect mentions of Semrush. The opinions they express are their own.

His most striking static piece shows a face of recombined features, with hollowed eyes and a haunting grimace, hunched into a crouched body in contrasting reds and blues. Titled Taking Cover, this oil and acrylic on canvas depicts violence in a resignified form: not in stark photorealism, but with such energy that, in Yosef’s hand, oil and acrylic become blood and flesh. The piece was part of Harsh Roads, a wider collection exhibited at Lisbon’s Prisma Studio alongside standouts Two Snakes, a lino print on fabric, and Hyena, in oil pastel and acrylic on canvas.

Harsh Roads draws inspiration from Yosef’s conversations with his father, a dialogue that serves as a window into his father’s life growing up in Palestine and the years that followed. Working from recordings of these conversations, Yosef drew each frame by hand with pen and paper. One ambition for the project is to animate his father’s story as a short film. Since Yosef moved back to Dublin after some time in London, the family home has become another point of gathering: “My dad loves gardening, and has made it a vibrant space full of colour and ever evolving inspiration,” he says. Connections are at the heart of Yosef’s artistic process.

Preservation and archiving are core to Yosef’s work: in the face of Palestine’s attempted cultural erasure, to archive is to bear witness. In Yosef’s animation, a short snippet of which is posted on his Instagram, his father narrates the connection between his wife, Yosef’s mother, and his own mother. Between pauses hanging in nostalgia, we hear Yosef’s father tell how his mother surprised him. He was not yet married to Caroline, yet his mother said, “I am your witness, God is your witness. You are married.” He continues, “For actually an old traditional Palestinian lady to say what she said, I thought that was something really special.”

Visually narrated in graphite illustrations of geometric fauna, almost like embroidery on lace cloths draped over dinner tables, this animation captures a deep warmth. Illustrations wash in and out like rippled water, swishing into flower wreaths that double as embraces and into family members rendered by Yosef’s pencil. Rather than using digital captions, Yosef subtitles the animation in pencil, extending its universe even further and drawing you deeper into the story at hand. The figures are not static either: frames are layered and altered ever so slightly to create snapshot movements. Moments are given room to sprawl out; Yosef’s father is given sacred space to be canvassed soft.

For Yosef, there’s something to be said for the junction between analogue methods and temporal stretching. Illustration opens up a new avenue for storytelling: “In a culture and industry that is increasing prioritisation of the quick and temporary, I think there is value in producing something that demands a pause.” The canvas acts as a vessel, a bag full of things he’s collected and memories passed down. Through illustration, the stories within Yosef’s family can unfold and be shared. Quite literally, a spotlight is drawn onto familial ties. Yosef says, “A huge goal of mine is to make work that is personal feel universal, and I think this piece speaks to that. I was waking up excited to start on it each day.”

Is your SEO strategy ready for Google’s new AI Mode?

Is your 2025 SERP strategy in danger?

What’s changed between traditional search mode and AI mode?

Watch our webinar on-demand as we explored early implications for click-through rates, organic visibility, and content performance so you can:

In this session, Nick Gallagher, SEO Lead at Conductor, shared actionable SEO guidance for this new era of search engine results pages (SERPs).

Get recommendations for optimizing content to stay competitive as AI-generated answers grow in prominence.

Don’t wait for the SERPs to leave you behind.

Watch on-demand to uncover if AI Mode will hurt your traffic, and what to do about it.


Register now to learn which modern SEO strategies don’t work and how to avoid them.

As brands step up their podcast marketing spend, podcast companies are putting on more live events to meet the growing demand.

In 2024, SiriusXM dipped its toes into live podcast events for the first time, organizing 10 live podcast events over the course of the year. So far in 2025, the company has already held 18 live podcast recordings, with plans to double or triple its overall number of live podcast events by the end of the year.

SiriusXM’s live podcasts, whose audiences typically number 40 or 50 fans, are not ticketed events. However, they represent a growing revenue stream for the company thanks to sponsorships from advertisers such as Hershey’s and Macy’s. SiriusXM holds the events both at the in-house recording studio in its New York City headquarters and at venues such as Avalon Hollywood. So far this year, SiriusXM Media has more than doubled the number of sponsors and corresponding ad revenue for its live podcast events, according to svp of strategic solutions Karina Montgomery, who declined to give exact figures but said that “ad revenue from these events is already up 160 percent as of June 2025, compared to all of 2024.”

SiriusXM frames its live podcast events as a premium sponsorship opportunity, giving brands a chance to connect with particularly engaged fans while still showing up as a sponsor in any audio and video content produced or streamed from the event. In addition to benefiting from the usual ad reads, podcast event sponsors are able to place their logos and branding around the recording studio and live audience, with opportunities for in-person activations such as branded coffee bars for attendees.

“You’re not necessarily going to garner scale from the experience in the room, but we often create additional bonus content that our podcasters put into the RSS feed, or on YouTube, that the sponsor is incorporated into,” said SiriusXM svp of podcast content Adam Sachs. “So they get the benefit of both of these worlds.”

SiriusXM is not the only podcast company that has stepped up its live event business in 2025. In 2023, Vox Media produced five sponsored live podcast tapings; last year, the company put on 19 sponsored podcast events. In the first six months of 2025 alone, Vox Media has produced 21 live podcast recordings. Some of these events were ticketed, and others were free, but they all prominently featured sponsors such as Smartsheet and Bulleit Whiskey, the latter of which was a first-time sponsor for Vox Media.

“Typically, experiential can be a heavy lift,” said Vox Media CRO Geoff Schiller. “As it relates to other live activations, live podcast tapings are a very light lift, so it’s also a sweet spot for brands.”

The surge in the number of live podcast events in 2025 reflects a broader shift: advertisers are betting bigger on podcasts, not just as an audio channel but as a full-fledged creator economy play. With the rise of video podcasting and hosts increasingly viewed as influencers, brands see podcasts as a high-impact way to reach loyal and engaged audiences and to extend their podcast presence beyond the feed. John Newall, svp of marketing at Smartsheet, which sponsored a Vox Media podcast event at South By Southwest in March, said that his company’s overall marketing spend on podcasts had increased year-over-year between 2024 and 2025, citing the live recordings as a significant selling point. (Schiller declined to specify the prices of Vox Media’s live event inventory, but said that the company’s live events typically command a higher rate than standard ad reads.)

“We had a coffee bar outside, so when people were lining up before or after they went, they could go grab a coffee on us, which enabled us to capture leads,” Newall said.

Hershey’s vp of media and marketing technology Vinny Renaldi said that live podcast events were “a rapidly growing part of our integrated marketing and content strategy,” rather than simply an extension of the company’s pre-existing podcast partnerships. He did not provide numbers.

“They allow us to build deeper, more tangible connections with both the podcasts we partner with and their communities,” he said.

Live podcast events are a growing trend for both podcast companies and individual podcast creators. Theo Gadd, a co-founder of the live podcast event platform and agency PodLife, said that the number of events that he had posted on his platform, which tracks live podcast events, had grown considerably year-over-year in the two years since he started his company, although he did not share specific figures. At the moment, Gadd said, just over 50 percent of the events featured by PodLife are sponsored.

“If the show has a pre-existing sponsor, we always tell them, ‘go talk to them; let them know what you’re doing,’” Gadd said. “Because events are such a nice touchpoint, and brands are really keen to get involved.”

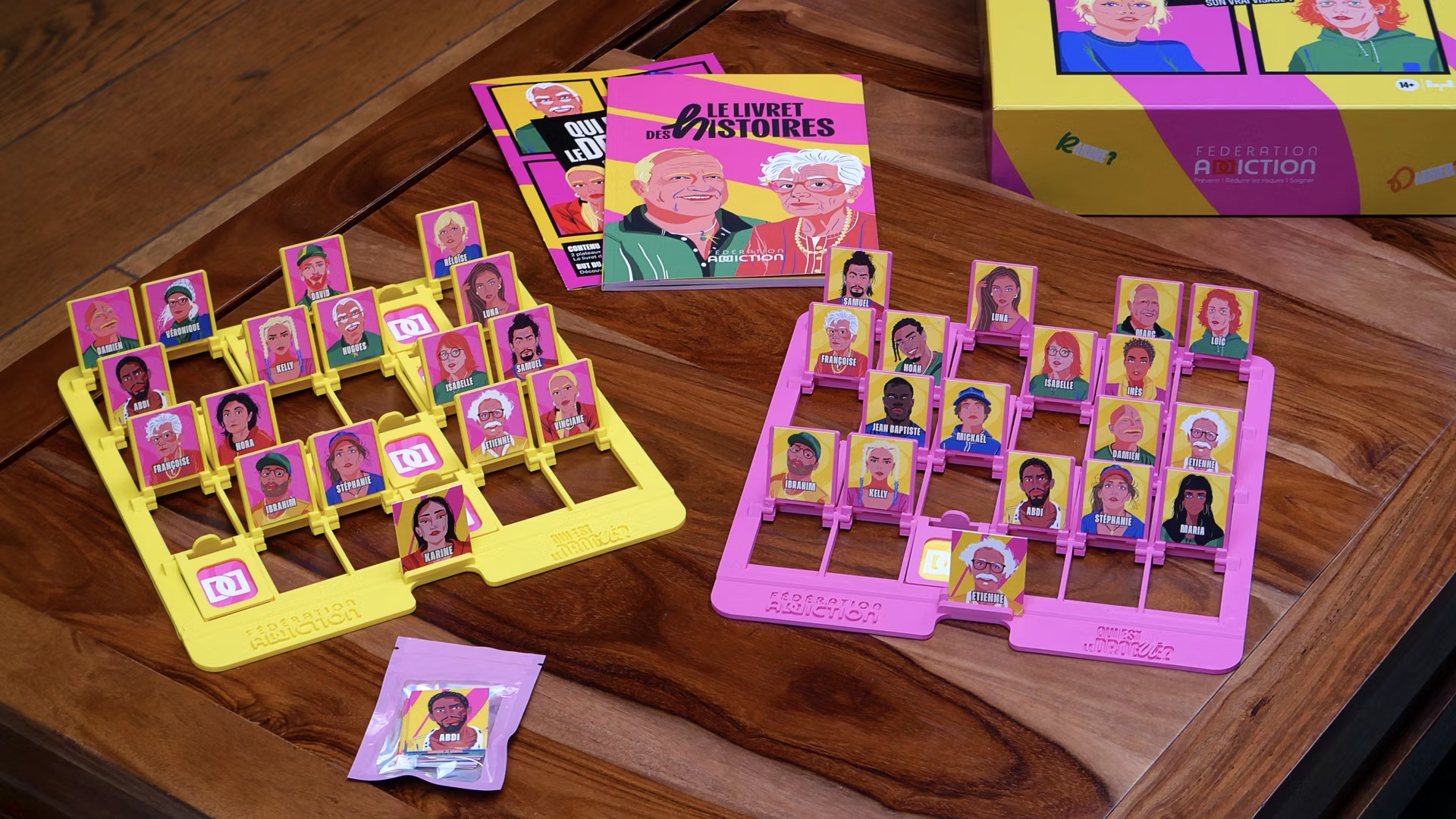

If we think back to the most iconic board games from our childhoods, many of us look back fondly at the humble two-player masterpiece, Guess Who. Reviving a slice of nostalgia, Fédération Addiction have put a thoughtful spin on the classic to redefine addiction stereotypes and challenge societal prejudice.

From billboard ads to TikTok trends, there are countless ways to captivate an audience nowadays, but tapping into nostalgia is almost always a winner. Fédération Addiction’s campaign is an emotionally resonant piece that shines in its juxtaposition of a playful childhood game and a serious social message.

(Image credit: Orès France/Fédération Addiction)

Conversion tracking tends to be one of those things advertisers set up once and then forget about, until something fails – big time.

But in my 16 years of experience running Google Ads, I can confidently say it’s the single most important factor affecting PPC results. Way before campaign failure, when results first start lagging, faulty conversions are almost always to blame.

So, whether you want to improve performance, or save a campaign that’s heading towards collapse, the starting point should be the same. Check your conversion data.

Conversion data will only be useful for you if it’s accurate. Serious missteps can happen if you rely on Google Ads to optimize performance when it has misleading or incomplete conversion tracking.

If your numbers are wrong, you’ll end up scaling the wrong campaigns, pausing the ones that generate a positive return, or misjudging your return on ad spend (ROAS) altogether, and this happens more often than you think.

Here are seven of the most common causes of inaccurate or inconsistent conversion data in Google Ads, and what you can do to fix each one.

Conversion tracking is often missing, duplicated, or firing in the wrong place. This is still one of the most common issues, and it can be the most damaging.

For example, suppose you track conversions on a thank-you page and a user refreshes it three times. Your backend will record one sale, but Google Ads will show three.

Reports like Repeat Rate are a great way to catch this error so you can fix it sooner rather than later.
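One way to spot the refresh problem outside the interface is to reconcile order IDs from your backend against the order IDs attached to tracked conversions. The sketch below assumes you can export both lists. The function name and IDs are hypothetical. Google Ads can also deduplicate conversions that carry a transaction ID, so it is worth checking Google's documentation on transaction IDs for your tag setup.

```python
from collections import Counter


def reconcile_conversions(backend_order_ids, tracked_order_ids):
    """Compare backend orders with the conversions recorded by the ad platform."""
    counts = Counter(tracked_order_ids)
    backend = set(backend_order_ids)
    tracked = set(counts)
    return {
        # Orders recorded more than once, e.g. from thank-you page refreshes.
        "duplicates": {oid: n for oid, n in counts.items() if n > 1},
        # Real orders that tracking never recorded.
        "missing": sorted(backend - tracked),
        # Tracked conversions with no matching backend order.
        "unmatched": sorted(tracked - backend),
    }
```

In the refresh example above, one backend order against three tracked records for the same ID would show up as `{"A100": 3}` under `duplicates` (with `A100` standing in for a real order ID).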

When tracking is unreliable, it’s impossible to optimize performance accurately. Campaign decisions are made on incomplete signals, and smart bidding models won’t have the data they need to learn effectively.

Start by ensuring your conversion actions in Google Ads are appropriately defined.

Use Google Tag Manager to centralize tracking across pages and platforms, and confirm accurate tag firing using Google’s Tag Assistant or built-in diagnostics.

Not all user actions are created equal – at least not when it comes to Google Ads optimization.

Metrics like scroll depth, time on site, or video engagement can be helpful, but they shouldn’t be treated as primary conversion events in your ad account.

These types of interactions are better as supporting metrics (secondary conversions). They can offer insights into how users engage with your landing page or website.

This type of information is valuable, but it does not belong to the core set of conversion actions used to drive bidding decisions in Google Ads.

When Google optimizes towards actions that don’t directly tie to revenue or qualified leads, you risk directing your budget towards activities that look great on a dashboard but don’t move the needle in your business.

Instead, focus on tracking high-intent actions in your Google Ads account, like purchases, form submissions, or phone calls, and use the supporting metrics to help improve the user experience.

Discrepancies between platforms are expected, but that doesn’t mean they should be ignored. It’s common to see Google Ads report one number and Google Analytics 4 report another for the same conversion event.

The root cause typically comes down to attribution model differences, reporting windows, or inconsistent event definitions.

To reduce confusion, first ensure your Google Ads and GA4 accounts are correctly linked. Then, audit the attribution models in both platforms and understand how each system defines and credits conversions.

GA4 uses data-driven attribution by default. Google Ads now also defaults to data-driven attribution for most accounts, but some conversion actions may still use last-click or another model. Align conversion settings as much as possible to keep your reporting consistent.
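Data-driven attribution can't be reproduced in a few lines. A toy comparison of two simpler rule-based models still shows why the same conversion can be credited differently across platforms. Last-click and linear attribution are swapped in here purely for illustration, and the campaign names are hypothetical.

```python
def last_click(path: list[str]) -> dict[str, float]:
    """Give all credit to the final touchpoint before the conversion."""
    if not path:
        raise ValueError("path must contain at least one touchpoint")
    return {path[-1]: 1.0}


def linear(path: list[str]) -> dict[str, float]:
    """Split credit evenly across every touchpoint in the path."""
    if not path:
        raise ValueError("path must contain at least one touchpoint")
    share = 1.0 / len(path)
    credit: dict[str, float] = {}
    for touchpoint in path:
        credit[touchpoint] = credit.get(touchpoint, 0.0) + share
    return credit


# Hypothetical path: one conversion after three ad interactions.
PATH = ["Display - Prospecting", "Search - Generic", "Search - Brand"]
```

Under last click, the brand search campaign gets the full conversion; under linear, each of the three touchpoints gets a third. Two reports built on different models will therefore disagree at the campaign level even when the underlying event count is identical.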

Google Ads can’t attribute a conversion to a specific click if the Google Click Identifier (GCLID) isn’t passed through correctly, which causes in-platform results to come in lower than they should.

This issue tends to result from redirects, link shorteners, or forms that strip URL parameters.

Fixing it starts with enabling auto-tagging in your account. Then, confirm that the GCLID is retained throughout the user journey, especially when forms span multiple pages or involve third-party integrations.

Customer relationship management (CRM) systems and custom landing pages are often the culprits, so work with your developers to make sure GCLID values persist and aren’t overwritten.
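For multi-page forms, the core task is to read the `gclid` parameter from the landing-page URL and carry it forward without overwriting an existing value. The Python sketch below shows that logic with the standard `urllib.parse` module. `carry_gclid` is a hypothetical helper, and in practice you would more likely store the value in a first-party cookie or hidden form field on the client side.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def carry_gclid(landing_url: str, next_url: str) -> str:
    """Append the gclid from the landing-page URL to next_url if it lacks one."""
    landing_params = dict(parse_qsl(urlsplit(landing_url).query))
    gclid = landing_params.get("gclid")
    if not gclid:
        return next_url  # Nothing to carry over.
    parts = urlsplit(next_url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    if any(key == "gclid" for key, _ in params):
        return next_url  # Never overwrite an existing click ID.
    params.append(("gclid", gclid))
    return urlunsplit(parts._replace(query=urlencode(params)))
```

For example, `carry_gclid("https://example.com/lp?gclid=abc123", "https://example.com/thanks?step=3")` returns `https://example.com/thanks?step=3&gclid=abc123`.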

Unfortunately, privacy compliance has introduced new gaps in attribution. If a user declines consent, Google’s tags may not fire, leaving conversions untracked.

This is particularly relevant in regions governed by GDPR, like the EU, and similar regulations.

Consent Mode helps bridge the gap. It adjusts how tags behave based on user permissions and allows for some modeled data even without full cookie acceptance.

Pair that with first-party data strategies and server-side tagging where appropriate.

Note that modeled conversions may take time to appear and don’t fully restore lost data, especially for smaller datasets or stricter consent regimes. But they will help fill in the blanks responsibly.

Offline conversions – like phone sales or in-store transactions – can be imported into Google Ads.

But if you’re inconsistent with your upload process or if it lacks the proper identifiers, those conversions won’t map to the original ad click.

Set up a schedule to upload offline conversions regularly, ideally on a daily or weekly basis. Include GCLID information and a timestamp with each entry to preserve click-level attribution.

Once the data is uploaded, monitor for errors inside the Google Ads interface. Minor mismatches in format or missing fields can stop conversions from registering entirely.
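The sketch below prepares an offline conversion file and catches the most common problems (missing GCLIDs and timestamps without a timezone) before upload. The header row is modeled on Google's offline conversion import template, and the time format follows the `yyyy-mm-dd hh:mm:ss+|-hh:mm` pattern used by the Google Ads API. Both are assumptions to verify against Google's current documentation. The function name, default currency and example rows are hypothetical.

```python
import csv
import io
from datetime import datetime, timezone

# Column names modeled on Google's offline conversion template (verify before uploading).
HEADER = ["Google Click ID", "Conversion Name", "Conversion Time",
          "Conversion Value", "Conversion Currency"]


def build_upload_csv(conversions: list[dict], conversion_name: str) -> tuple[str, list[dict]]:
    """Return CSV text for valid rows plus the rows rejected before upload."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(HEADER)
    rejected = []
    for conv in conversions:
        gclid = conv.get("gclid")
        timestamp = conv.get("timestamp")
        # Rows without a GCLID or a timezone-aware timestamp can't map to a click.
        if not gclid or not isinstance(timestamp, datetime) or timestamp.tzinfo is None:
            rejected.append(conv)
            continue
        writer.writerow([
            gclid,
            conversion_name,
            # e.g. "2025-06-01 13:45:00+00:00"
            timestamp.isoformat(sep=" ", timespec="seconds"),
            f"{conv.get('value', 0):.2f}",
            conv.get("currency", "USD"),  # Hypothetical default currency.
        ])
    return buffer.getvalue(), rejected


# Hypothetical example rows: only the first is complete.
EXAMPLE_ROWS = [
    {"gclid": "abc123", "timestamp": datetime(2025, 6, 1, 13, 45, tzinfo=timezone.utc),
     "value": 120, "currency": "USD"},
    {"gclid": "", "timestamp": datetime(2025, 6, 1, 14, 0, tzinfo=timezone.utc)},
    {"gclid": "def456", "timestamp": datetime(2025, 6, 1, 15, 0)},  # Naive timestamp.
]
```

Running it on `EXAMPLE_ROWS` produces one valid data row and rejects the other two, so they can be fixed instead of silently failing to register.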

Even when tracking is conceptually correct, technical issues can block it from functioning.

Conflicting scripts, outdated plugins, or misplaced tags can all prevent conversion events from firing properly. These problems often go undetected until someone audits the data or sees a sudden drop in conversions.

Use Tag Assistant or Google Tag Manager’s Preview Mode to audit your implementation regularly.

Avoid conditional loading unless absolutely necessary, and coordinate with developers when other platforms – like Meta, HubSpot, or Salesforce – are active on the same pages.

Conversion tracking doesn’t exist in a vacuum, and it’s your job to make sure it plays well with the rest of your stack.

Incomplete conversion data is a strategic liability. Feeding Google Ads AI the right signals can mean the difference between PPC growth and stagnation.

By consistently auditing your setup and addressing these common issues, you’ll build cleaner data, glean better insights, and track your way to better performance.


Featured Image: TetianaKtv/Shutterstock

Amazon is making its biggest bet this year on its annual sales palooza, turning the two-day Prime Day event into four days, from July 8 to 11.

Brands are preparing for a Prime Day sales spike by increasing ad budgets to promote their deals, and Amazon sales reps are trying to juice ad spend by making tailored recommendations to specific brands around the event.

Yet, brands are also more cautious this year compared to previous years, because of looming tariffs and a likely pullback in consumer spending.

“The length of the event is two times longer than it was last year, but that doesn’t mean all of our clients are doubling up on the volume of sales they’re expecting,” said Joe O’Connor, senior innovation and growth director at ad agency Tinuiti, which anticipates a 10% to 25% year-over-year increase in Prime Day ad spend. “The economic environment is weighing on both consumers and brands.”

In the weeks leading up to Prime Day, Amazon sales representatives briefed marketers on how much they should spend to promote their Prime Day sales to stick out from the clutter.

According to a pitch deck sent to an agency and viewed by ADWEEK, Amazon this year recommended that a brand increase its daily ad spend by 25% in the days leading up to Prime Day to build awareness and find new customers.

During Prime Day, Amazon recommended increasing daily ad spend by 100%. And in the days after Prime Day, Amazon recommended a 25% increase in daily budgets to retarget people who bought products, or people who looked but didn’t buy.

These recommendations amounted to a quarter of the brand’s monthly spend on each day of Prime Day, according to the source who was shown the pitch deck. Last year, the source spent 22% of its monthly budget on each of the event’s two days, or 44% of its monthly spend in total.
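The percentages are easier to compare side by side. The Python sketch below applies the reported lifts to a baseline daily budget and totals the event's share of the monthly budget. The baseline figure is a hypothetical example, and the multipliers are only the ones reported for this one advertiser.

```python
# Reported lifts on daily ad spend for one advertiser (per the pitch deck).
LIFT = {"before": 0.25, "during": 1.00, "after": 0.25}


def daily_budget(baseline: float, phase: str) -> float:
    """Daily budget after applying the reported lift for a phase."""
    return baseline * (1 + LIFT[phase])


def event_share(share_per_day: float, days: int) -> float:
    """Fraction of the monthly budget spent across all event days."""
    return share_per_day * days


if __name__ == "__main__":
    baseline = 1_000.0  # hypothetical baseline daily budget in dollars
    for phase in LIFT:
        print(phase, daily_budget(baseline, phase))
    print("this year:", event_share(0.25, 4))  # four days at a quarter each
    print("last year:", event_share(0.22, 2))  # two days at 22% each
```

At a quarter of the monthly budget per day, the four-day event alone would use the equivalent of a full month's spend. Last year's two days used 44%.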

Tinuiti’s O’Connor was not presented Amazon’s pitch deck but said that the budget breakdowns “are not terribly far off from what our team would recommend.” He added that budgets vary significantly for individual advertisers.

According to Amazon Ads, the recommendation in the pitch deck was tailored for a specific advertiser and does not apply to all advertisers.

A spokesperson for Amazon Ads said that Prime Day is “a proven opportunity to increase brand awareness, consideration, sales, and engender longstanding customer relationships,” adding that Amazon works with advertisers to develop Prime Day ad recommendations.

While some advertisers adhere to Amazon’s recommended levels of spending, others are buying ads away from Amazon, said Hillary Kupferberg, VP of performance media at Exverus Media. With retailers like Walmart and Target offering similar deals during Prime Day, competition for ad budgets is increasing, she said.

Some advertisers are also reluctant to splurge on Prime Day because the event is sandwiched between Fourth of July sales and back-to-school shopping, Kupferberg said. Consumers could be fatigued by the onslaught of deals.

“It reinforces how cluttered we are and how much competition there is,” Kupferberg said. “Everyone understands that it’s an uncertain economic time, but nobody is quite sure how that will play out.”

Mike Feldman, svp of commerce at Flywheel, suggested that the timing of Prime Day this year could help Amazon boost sales heading into the holidays, when some experts expect a pullback in consumer spending.

“Amazon is going to try to maximize their inflationary impact,” he said. “Shoppers have more time to make purchases—this is to make sure that they win Q3 and mitigate risk heading into the holidays.”

Brands also continue to heavily scrutinize their ad spend due to economic concerns.

“The ultimate thing that we’re hearing is that the ad budgets need to be more effective than ever before,” Feldman said.

He added that the payoff with Prime Day can be substantial for brands looking to get ahead of holiday sales.

“If you win Prime Day, you win ranking and could be competitive for the rest of the year,” he said.

Cloudflare’s new “pay per crawl” initiative has sparked a debate among SEO professionals and digital marketers.

The company has introduced a default AI crawler-blocking system alongside new monetization options for publishers.

This enables publishers to charge AI companies for access, which could impact how web content is consumed and valued in the age of generative search.

The system, now in private beta, blocks known AI crawlers by default for new Cloudflare domains.

Publishers can choose one of three access settings for each crawler: allow, charge, or block.

Crawlers that attempt to access blocked content will receive a 402 Payment Required response. Publishers set a flat, sitewide price per request, and Cloudflare handles billing and revenue distribution.
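Cloudflare performs this check at its edge, so publishers don't write it themselves. The decision logic is still easy to model. The Python sketch below is hypothetical. The header names `crawler-price` and `crawler-max-price` are assumptions modeled loosely on Cloudflare's announcement, so check them against the official documentation. The sketch treats 402 as the response for the charge setting and assumes a plain 403 for the block setting. That split is also an assumption to verify.

```python
from dataclasses import dataclass, field
from enum import Enum


class Setting(Enum):
    ALLOW = "allow"
    CHARGE = "charge"
    BLOCK = "block"


@dataclass
class Response:
    status: int
    headers: dict = field(default_factory=dict)


# Hypothetical header names; confirm against Cloudflare's documentation.
PRICE_HEADER = "crawler-price"
MAX_PRICE_HEADER = "crawler-max-price"


def handle_crawl(setting: Setting, request_headers: dict, price_usd: float) -> Response:
    """Model how a verified AI crawler's request is answered under one setting."""
    if setting is Setting.ALLOW:
        return Response(200)
    if setting is Setting.BLOCK:
        return Response(403)  # assumption: an outright block answers 403 Forbidden
    # CHARGE: serve only if the crawler declared it will pay at least the flat price.
    offered = request_headers.get(MAX_PRICE_HEADER)
    if offered is not None and float(offered) >= price_usd:
        return Response(200)
    # No (or too low) payment intent: 402 plus the flat sitewide price.
    return Response(402, {PRICE_HEADER: f"USD {price_usd:.2f}"})


if __name__ == "__main__":
    print(handle_crawl(Setting.CHARGE, {}, 0.01))
```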

Cloudflare wrote:

“Imagine asking your favorite deep research program to help you synthesize the latest cancer research or a legal brief, or just help you find the best restaurant in Soho — and then giving that agent a budget to spend to acquire the best and most relevant content.”

The system integrates directly with Cloudflare’s bot management tools and works alongside existing WAF rules and robots.txt files. Authentication is handled using Ed25519 key pairs and HTTP message signatures to prevent spoofing.
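Under HTTP Message Signatures (RFC 9421), a crawler builds a canonical “signature base” string from selected request components and signs it with its Ed25519 private key. The verifier, here Cloudflare, rebuilds the same string and checks the signature with the public key identified by `keyid`.

The Python sketch below builds only that string and the matching `Signature-Input` value, using the standard library. The covered component, key ID, and timestamps are illustrative assumptions. The signing step itself is left out to keep the sketch dependency-free; it could be done with, for example, the third-party `cryptography` package's `Ed25519PrivateKey.sign`.

```python
def signature_params(components: list[str], created: int, expires: int,
                     keyid: str, alg: str = "ed25519") -> str:
    """Serialize the @signature-params value: covered components plus parameters."""
    inner = " ".join(f'"{name}"' for name in components)
    return f'({inner});created={created};expires={expires};keyid="{keyid}";alg="{alg}"'


def signature_base(covered: list[tuple[str, str]], params: str) -> str:
    """Build the signature base: one line per covered component, then the
    @signature-params line, joined by LF with no trailing newline."""
    lines = [f'"{name}": {value}' for name, value in covered]
    lines.append(f'"@signature-params": {params}')
    return "\n".join(lines)


def signature_input_header(label: str, params: str) -> str:
    """Value of the Signature-Input header, e.g. sig1=(...);created=..."""
    return f"{label}={params}"


if __name__ == "__main__":
    covered = [("@authority", "example.com")]  # illustrative request component
    params = signature_params([name for name, _ in covered],
                              created=1700000000, expires=1700000300,
                              keyid="example-key-id")  # hypothetical key ID
    print(signature_base(covered, params))
    print(signature_input_header("sig1", params))
    # The crawler would then sign signature_base(...).encode() with Ed25519 and
    # send the result base64-encoded in a Signature header: sig1=:<base64>:
```

Because the verifier recomputes the base from the request it actually received, a spoofed crawler without the private key cannot produce a signature that checks out.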

Cloudflare says early adopters include major publishers and platforms such as Condé Nast, Time, The Atlantic, AP, BuzzFeed, Reddit, Pinterest, and Quora.

The current setup supports only flat pricing, but the company plans to explore dynamic and granular pricing models in future iterations.

While Cloudflare’s new controls can be changed manually, several SEO experts are concerned about the impact of making the system opt-out rather than opt-in.

“This won’t end well,” wrote Duane Forrester, Vice President of Industry Insights at Yext. He warned that businesses may struggle to appear in AI-powered answers without realizing that crawler access to their sites is blocked unless a fee is paid.

Lily Ray, Vice President of SEO Strategy and Research at Amsive Digital, noted the change is likely to spark urgent conversations with clients, especially those unaware that their sites might now be invisible to AI crawlers by default.

Ryan Jones, Senior Vice President of SEO at Razorfish, said that most of his clients actually want AI crawlers to access their content for visibility reasons.

Some in the community welcome the move as a long-overdue rebalancing of content economics.

“A force is needed to tilt the balance back to where it once was,” said Pedro Dias, Technical SEO Consultant and former member of Google’s Search Quality team. He suggests that the current dynamic favors AI companies at the expense of publishers.

Ilya Grigorik, Distinguished Engineer and Technical Advisor at Shopify, praised the use of cryptographic authentication, saying it’s “much needed” given how difficult it is to distinguish between legitimate and malicious bots.

Under the new system, crawlers must authenticate using public key cryptography and declare payment intent via custom HTTP headers.
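From the crawler's side, declaring payment intent becomes a budget decision, much like the agent “budget” in Cloudflare's quote above. The Python sketch below is hypothetical. It uses assumed header names (`crawler-price` on the 402 response, `crawler-max-price` on the retry) that may not match Cloudflare's final specification. It also omits the signature headers a real request would carry.

```python
from typing import Optional

# Hypothetical header names; confirm against Cloudflare's documentation.
PRICE_HEADER = "crawler-price"
MAX_PRICE_HEADER = "crawler-max-price"


def parse_price(value: str) -> float:
    """Parse a price such as 'USD 0.01' (assumed format) into a float."""
    currency, amount = value.split()
    if currency != "USD":
        raise ValueError(f"unsupported currency: {currency}")
    return float(amount)


def paid_retry_headers(status: int, response_headers: dict,
                       budget_remaining: float) -> Optional[dict]:
    """Return headers for a paid retry, or None if the crawler should move on."""
    if status != 402 or PRICE_HEADER not in response_headers:
        return None  # not a payment request
    price = parse_price(response_headers[PRICE_HEADER])
    if price > budget_remaining:
        return None  # too expensive for the remaining budget
    # Declare willingness to pay the quoted price on the retry.
    return {MAX_PRICE_HEADER: f"{price:.2f}"}


if __name__ == "__main__":
    print(paid_retry_headers(402, {PRICE_HEADER: "USD 0.01"}, budget_remaining=1.0))
```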

As Cloudflare’s new default settings take effect, there’s concern around losing visibility in AI search tools. But you have options to regain control.

AI traffic may decline sharply if your domain blocks AI bots without you realizing it.

Himanshu Sharma, digital analytics consultant and founder of OptimizeSmart, warned on X:

“Expect a sharp decline in AI traffic reported by GA4 as Cloudflare blocks almost all known AI crawlers/bots from scraping your website content by default.”
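One way to see whether that decline is hitting your site is to track, over time, the share of visits referred by AI assistants. The Python sketch below classifies referrer URLs, for example from a GA4 export or server logs. The host list is an illustrative assumption and is far from complete.

```python
from urllib.parse import urlparse

# Illustrative, incomplete list of AI assistant referrer hosts (assumption).
AI_REFERRER_HOSTS = {
    "chatgpt.com",
    "chat.openai.com",
    "perplexity.ai",
    "gemini.google.com",
    "copilot.microsoft.com",
    "claude.ai",
}


def is_ai_referral(referrer: str) -> bool:
    """True if the referrer's host is a listed AI host or one of its subdomains."""
    host = (urlparse(referrer).hostname or "").lower()
    return any(host == h or host.endswith("." + h) for h in AI_REFERRER_HOSTS)


def ai_share(referrers: list[str]) -> float:
    """Fraction of referrers that come from the listed AI hosts."""
    if not referrers:
        return 0.0
    return sum(is_ai_referral(r) for r in referrers) / len(referrers)


if __name__ == "__main__":
    sample = ["https://chatgpt.com/", "https://www.google.com/"]
    print(ai_share(sample))
```

Comparing this share week over week, before and after any change to your Cloudflare settings, gives a rough signal of whether crawler blocking is affecting AI-driven visits.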

Sharma advised site owners to proactively review their Cloudflare settings. Doing so lets you decide whether to maintain visibility on AI-driven platforms or limit the use of your content for training and responses.

Cloudflare’s pay-per-crawl system formalizes a new layer of negotiation over who gets to access web content, and at what cost.

For SEO pros, this adds complexity: visibility may now depend not just on ranking, but on crawler access settings, payment policies, and bot authentication.

While some see this as empowering publishers, others warn it could fragment the open web, where content access varies based on infrastructure and paywalls.

If generative AI becomes a core part of how people search, and the pipes feeding that AI are now toll roads, websites will need to manage visibility across a growing patchwork of systems, policies, and financial models.

Featured Image: Roman Samborskyi/Shutterstock

When it comes to data, marketers have focused primarily on the quality of the structured data in their CRM and marketing automation platforms over the past 15 years.

In my March article, I discussed why current data governance and management processes must be revisited. This time, I will maintain my focus on unstructured data by diving into the challenges of customer satisfaction surveys.

We’re all familiar with customer satisfaction surveys. For our purposes here, there’s no need to differentiate between an NPS (Net Promoter Score), five-point rating or similar customer experience metrics. Instead, we’re going to focus on the free text box, which is often the last part of these surveys.

How we got here

Whether you are directly responsible for customer surveys or you’re partnering with a customer success team, extracting actionable insights from free-text comments is a common challenge.

There are several reasons for this.

Platform-related challenges: Today’s survey tools range from form-only solutions like Google Forms and Typeform, to full survey solutions like SurveyMonkey, to enterprise customer experience platforms like Qualtrics and Medallia.

Process-related challenges: There is a range of different survey approaches, resulting in different data formats and different roles responsible for analyzing survey data.

People-related challenges: These include prioritizing voice of customer (VOC) programs relative to other initiatives, properly analyzing unstructured comments, and relying on quantitative metrics that are simply easier to measure, such as year-over-year trends.

In the automation era, we might also be guilty of over-surveying, which leads to even more data — a problem of volume and velocity.


Many survey tools and customer experience platforms have embedded algorithms and natural language processing (NLP) capabilities to conduct some analysis. Think of NLP and corresponding algorithms as keyword matching on steroids. They use the surrounding context to help structure the unstructured free-text feedback into various sentiment categories, like positive, neutral or negative.
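Keyword matching at its simplest looks like the toy Python classifier below, which also shows how even a little surrounding context changes the result. It is an illustrative assumption, not how any particular survey platform works. It scores words against small hand-made positive and negative lists. It flips a word's polarity when the word directly follows a negator such as “not.” Production NLP models weigh far more context than one preceding word.

```python
import re

# Tiny hand-made lexicons (illustrative assumptions, not a real model).
POSITIVE = {"great", "fast", "love", "easy", "helpful"}
NEGATIVE = {"slow", "broken", "late", "rude", "confusing"}
NEGATORS = {"not", "never", "no"}


def classify(comment: str) -> str:
    """Label a comment positive, negative, or neutral by summing word polarities."""
    tokens = re.findall(r"[a-z']+", comment.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = 1 if token in POSITIVE else -1 if token in NEGATIVE else 0
        # Minimal "context": a negator right before a word flips its polarity.
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    print(classify("Checkout was not easy"))  # negative
```

Plain keyword matching would have scored “not easy” as positive. The one-word lookback is the crudest possible version of using surrounding context.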

Some platforms also include human-in-the-loop feedback cycles that ask users to help categorize feedback, particularly when the free text box contains additional context.

Because this functionality is often embedded in the survey tools, there are a few potential issues:

Thanks to generative AI tools like ChatGPT and Gemini, today we all have a black box at our fingertips and can use it to test and validate sentiment categorizations. We can go beyond the positive, negative or neutral rating by probing deeper to understand the specifics.

If your team finds the cost of a full-featured customer survey and analysis platform too steep for its budget, you could even build a DIY solution.

Let’s examine how I put this approach to the test.

First, I created a set of synthetic customer data using ChatGPT to avoid any data privacy concerns. I prompted it to provide a sample of records for customers who engaged with an online retailer, including each customer’s overall satisfaction rating and open-text feedback.

A sample of synthetic customer data generated by ChatGPT.
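If you would rather not rely on a chatbot for test data, a few lines of Python can generate a comparable synthetic file. The sketch below is a hypothetical stand-in for the ChatGPT-generated sample; the column names and comments are assumptions. Comments are drawn independently of the score, so some rows will pair a high rating with a critical comment. That kind of mismatch is worth probing.

```python
import csv
import io
import random

# Hypothetical schema; the article's actual columns are not shown.
FIELDS = ["customer_id", "overall_satisfaction", "feedback"]

COMMENTS = [
    "Fast delivery and easy checkout.",
    "The product broke after a week.",
    "Support was helpful, but shipping was slow.",
    "Great prices, confusing return policy.",
    "No complaints.",
]


def synthetic_responses(n: int, seed: int = 42) -> list[dict]:
    """Generate n fake survey rows; a fixed seed makes the output reproducible."""
    rng = random.Random(seed)
    return [
        {
            "customer_id": f"C{i:04d}",
            "overall_satisfaction": rng.randint(1, 5),  # 1-5 rating
            "feedback": rng.choice(COMMENTS),  # chosen independently of the rating
        }
        for i in range(1, n + 1)
    ]


def to_csv(rows: list[dict]) -> str:
    """Serialize rows to CSV text that can be imported into Google Sheets."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(to_csv(synthetic_responses(5)))
```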

I then uploaded the file into Google Sheets and clicked on the “Analyze this data” button, which puts Gemini’s AI model directly into Sheets.

Within seconds, Gemini generated a top-line summary of qualitative results with broad-based trends to characterize the data.

Without leaving Google Sheets, the embedded Gemini chat asked me if I’d like to delve deeper.

This inline capability demonstrates why the new DIY approach will be so impactful. It’s no longer about separate tools: I literally chatted with my data, delving deeper with each prompt.

I know what you’re thinking: Shouldn’t I verify this myself?

Yes, but this top-level summary gave me a starting point. It’s no different from what I’d receive from an entry-level analyst (which I’d also want to verify). The critical difference was my ability to do this directly inline, without leaving my data sheet and analysis flow.

I then ran a similar test. I uploaded the data directly to Gemini and prompted it to do a more complete sentiment analysis.

If you’ve used some of the newer LLMs, you’ve seen how they show their thinking to users. Here, that transparency was critical, because it revealed the model’s sentiment classification method.

Survey platforms with embedded analysis tools might link free-text feedback to the overall satisfaction score. I asked my LLM to determine the sentiment based exclusively on the text, without anchoring it to the overall rating.

The LLM identified four specific examples in which the anchored sentiment differed from the direct sentiment and explained why. This could spark other customer service or follow-up actions, all within minutes and within my control.
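Once each comment has a text-only sentiment label, whether from an LLM or another classifier, the anchored-versus-direct comparison is simple to reproduce outside any platform. In the Python sketch below, the rating-to-sentiment thresholds are assumptions: 4 to 5 is positive, 3 is neutral, and 1 to 2 is negative. The text classifier is passed in as a function, so you can plug in whatever you use.

```python
from typing import Callable, Iterable


def rating_sentiment(score: int) -> str:
    """Map a 1-5 satisfaction score to a label (assumed thresholds)."""
    if score >= 4:
        return "positive"
    if score == 3:
        return "neutral"
    return "negative"


def find_mismatches(rows: Iterable[dict],
                    text_sentiment: Callable[[str], str]) -> list[dict]:
    """Return rows whose text-only sentiment disagrees with the rating-anchored one."""
    mismatches = []
    for row in rows:
        anchored = rating_sentiment(int(row["overall_satisfaction"]))
        direct = text_sentiment(row["feedback"])
        if anchored != direct:
            mismatches.append({**row, "anchored": anchored, "direct": direct})
    return mismatches


if __name__ == "__main__":
    rows = [{"overall_satisfaction": 5, "feedback": "Late delivery and rude support."}]
    labels = {"Late delivery and rude support.": "negative"}  # e.g. from an LLM
    print(find_mismatches(rows, labels.__getitem__))
```

Each flagged row is a candidate for the kind of follow-up action described above.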

If you’re currently using a full-featured survey and analysis platform, this exercise might raise new questions about what embedded algorithms do and how they do it. Alternatively, if you run surveys outside of a platform, you’re more capable than ever of separating the collection and analysis of data.

For SMBs, this exercise demonstrates a cost-effective, DIY approach to survey collection and analysis. These teams can leverage basic forms solutions (e.g., Google Forms) instead of full survey platforms.

Data privacy and confidentiality

It would be irresponsible to conclude this exercise without addressing one of the major reasons these approaches may be limited initially: the lack of clarity regarding data privacy and confidentiality guidelines.

Most experts recommend that teams conduct this type of analysis only within the walled garden of their organization’s Team or Enterprise versions of ChatGPT, Microsoft Copilot or Google Gemini Workspace, because those versions offer data retention and do-not-train-the-model settings.

Teams should consult their organization’s legal, compliance, and IT policies before placing sensitive data in an AI/LLM platform. Of course, many individuals may be experimenting already, which leads to the critical step of revisiting your organization’s data governance policies. The latest wave of “chat with CRM” connectors will accelerate these concerns and heighten the need to adjust compliance frameworks.

Although it’s still early, I am encouraged by these initial tests of “chatting with data.” Like every other AI-infused trend, the technology has outpaced our ability to adjust processes and platform management. However, once analyzing free-text feedback becomes as easy as running quantitative summaries, my hope is that we can flip our feedback processes to focus more on what customers said rather than only on how they rated us.


Contributing authors are invited to create content for MarTech and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. MarTech is owned by Semrush. Contributor was not asked to make any direct or indirect mentions of Semrush. The opinions they express are their own.